14. FCN-8 Architecture

FCN-8, Architecture

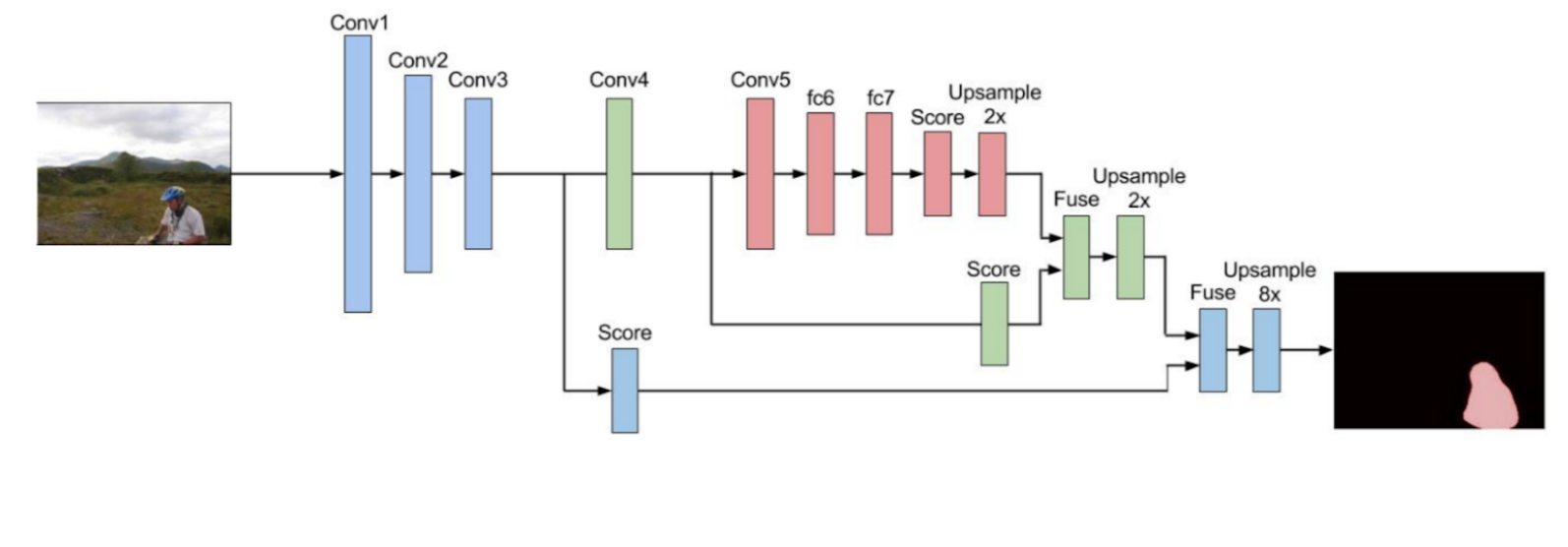

Let’s focus on a concrete implementation of a fully convolutional network: the FCN-8 architecture developed at Berkeley. Many FCN models are derived from this FCN-8 implementation. The encoder for FCN-8 is the VGG16 model pretrained on ImageNet for classification. The fully-connected "score" layers are replaced by 1-by-1 convolutions. In PyTorch, the convolutional score layer looks like this:

```python
import torch.nn as nn
self.score = nn.Conv2d(input_size, num_classes, 1)  # 1x1 conv: input_size channels -> num_classes scores
```

The complete architecture is pictured above.
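To see what a 1-by-1 score layer does to tensor shapes, here is a small self-contained sketch. The channel count (512) and class count (21) are illustrative assumptions, not values taken from the text. It also checks that the 1-by-1 convolution is the same as applying one fully-connected layer independently at every pixel, which is why it can replace the fully-connected score layers.

```python
import torch
import torch.nn as nn

# Hypothetical sizes: 512 feature channels (as in VGG16's last conv block)
# and 21 classes (for example PASCAL VOC's 20 classes plus background).
input_size, num_classes = 512, 21
score = nn.Conv2d(input_size, num_classes, kernel_size=1)

features = torch.randn(2, input_size, 7, 7)  # (batch, channels, height, width)
scores = score(features)
print(scores.shape)  # torch.Size([2, 21, 7, 7]): height and width are unchanged

# The same weights applied as a fully-connected layer at every pixel give the same result.
linear = nn.Linear(input_size, num_classes)
with torch.no_grad():
    linear.weight.copy_(score.weight.view(num_classes, input_size))
    linear.bias.copy_(score.bias)
    per_pixel = linear(features.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)
print(torch.allclose(per_pixel, scores, atol=1e-4))  # True
```

Because the layer only mixes channels, it works on feature maps of any height and width, producing a spatial map of class scores rather than a single class vector.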

Skip connections and several upsampling layers let the network combine coarse, semantic information from deep layers with finer spatial detail from shallower layers. In practice, the network is often split into an encoder, whose parameters come from VGG16, and a decoder, which contains the upsampling layers.
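The encoder side of that split can be sketched as follows. This is a minimal sketch under stated assumptions, not the reference implementation: it rebuilds VGG16's convolutional layout with random weights so it runs without downloading anything (in practice you would load ImageNet-pretrained weights, for example from torchvision's `vgg16`), it omits the convolutionalized fully-connected layers that FCN-8 places after pool5, and the names `VGGEncoder` and `make_stages` are hypothetical. The encoder returns the pool3, pool4 and pool5 outputs because those are the maps the decoder needs.

```python
import torch
import torch.nn as nn

# VGG16 convolutional configuration: numbers are output channels of 3x3 convs,
# "M" is a 2x2 max-pool that halves height and width.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def make_stages(cfg):
    """Split the VGG16 layers into stages that each end with a pooling layer."""
    stages, layers, in_ch = [], [], 3
    for v in cfg:
        if v == "M":
            layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
            stages.append(nn.Sequential(*layers))
            layers = []
        else:
            layers += [nn.Conv2d(in_ch, v, kernel_size=3, padding=1), nn.ReLU(inplace=True)]
            in_ch = v
    return stages


class VGGEncoder(nn.Module):
    """Hypothetical encoder: returns the pool3, pool4 and pool5 feature maps."""

    def __init__(self):
        super().__init__()
        s = make_stages(VGG16_CFG)
        self.to_pool3 = nn.Sequential(s[0], s[1], s[2])  # 1/8 resolution, 256 channels
        self.to_pool4 = s[3]                             # 1/16 resolution, 512 channels
        self.to_pool5 = s[4]                             # 1/32 resolution, 512 channels

    def forward(self, x):
        pool3 = self.to_pool3(x)
        pool4 = self.to_pool4(pool3)
        pool5 = self.to_pool5(pool4)
        return pool3, pool4, pool5


encoder = VGGEncoder()
pool3, pool4, pool5 = encoder(torch.randn(1, 3, 64, 64))
print(pool3.shape, pool4.shape, pool5.shape)
# torch.Size([1, 256, 8, 8]) torch.Size([1, 512, 4, 4]) torch.Size([1, 512, 2, 2])
```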

FCN-8, Decoder Portion

To build the decoder portion of FCN-8, we upsample the output of the convolutional layers taken from VGG16 back to the original image size. In PyTorch, the tensor produced by the final transposed convolution layer is 4-dimensional: (batch_size, num_classes, original_height, original_width).

To define a transposed convolutional layer for upsampling, we can write the following code, where 3 is the size of the convolving kernel:

```python
# stride=2 upsamples by 2; padding=1 with output_padding=1 makes the output exactly twice the input size
self.transpose = nn.ConvTranspose2d(input_depth, num_classes, 3, stride=2, padding=1, output_padding=1)
```

Transposed convolution layers increase the height and width of the 4D input tensor. See the PyTorch documentation for `nn.ConvTranspose2d` for details.
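The exact output size matters, because a skip connection can only add two tensors of identical shape. The sketch below compares the transposed convolution's output size with and without padding, using the output-size formula given in the PyTorch `nn.ConvTranspose2d` documentation. The sizes and the helper `out_size` are hypothetical, chosen for illustration.

```python
import torch
import torch.nn as nn

input_depth, num_classes = 21, 21  # hypothetical sizes for illustration
x = torch.randn(1, input_depth, 10, 10)


def out_size(h_in, kernel, stride, padding=0, output_padding=0, dilation=1):
    """Output height/width of ConvTranspose2d (formula from the PyTorch docs)."""
    return (h_in - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1


# kernel 3, stride 2, no padding: 10 -> 21, one pixel more than double
plain = nn.ConvTranspose2d(input_depth, num_classes, 3, stride=2)
print(plain(x).shape)  # torch.Size([1, 21, 21, 21])

# kernel 3, stride 2, padding=1, output_padding=1: 10 -> 20, exactly double
exact = nn.ConvTranspose2d(input_depth, num_classes, 3, stride=2, padding=1, output_padding=1)
print(exact(x).shape)  # torch.Size([1, 21, 20, 20])

print(out_size(10, 3, 2), out_size(10, 3, 2, padding=1, output_padding=1))  # 21 20
```

An alternative that also doubles exactly is kernel 4, stride 2, padding 1; which one to use is a design choice.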

Skip Connections

Next, we add skip connections to the model. To do this, we combine the outputs of two layers: the current layer and a layer further back in the network, typically a pooling layer.

In the following example, we combine the result of the previous layer with the output of the fourth pooling layer (`pool_4`) through elementwise addition (`+`, equivalent to `torch.add`).

```python
# the shapes of these two tensors must match to add them; in FCN-8, pool_4 is
# first passed through its own 1x1 score layer so its channels equal num_classes
input = input + pool_4
```

We can then follow this with a call to our transpose layer.

```python
input = self.transpose(input)
```

We can repeat this process, upsampling and creating the necessary skip connections, until the network is complete!
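Putting the upsampling and skip connections together, an FCN-8-style decoder can be sketched as below. This is a minimal sketch, not the original implementation. It assumes VGG16-sized pool3/pool4/pool5 inputs and omits the convolutionalized fully-connected layers that sit after pool5. It also chooses padding so that every upsampled map lands exactly on the skip layer's size, instead of cropping. The class name `FCN8Decoder` and the kernel sizes are illustrative assumptions.

```python
import torch
import torch.nn as nn


class FCN8Decoder(nn.Module):
    """Hypothetical FCN-8-style decoder taking VGG16 pool3, pool4 and pool5 maps."""

    def __init__(self, num_classes, pool3_ch=256, pool4_ch=512, pool5_ch=512):
        super().__init__()
        # 1x1 "score" layers turn each feature map into per-class scores
        self.score_pool5 = nn.Conv2d(pool5_ch, num_classes, 1)
        self.score_pool4 = nn.Conv2d(pool4_ch, num_classes, 1)
        self.score_pool3 = nn.Conv2d(pool3_ch, num_classes, 1)
        # 2x upsampling: kernel 3, stride 2, padding 1, output_padding 1 -> exactly double
        self.up2_a = nn.ConvTranspose2d(num_classes, num_classes, 3, stride=2, padding=1, output_padding=1)
        self.up2_b = nn.ConvTranspose2d(num_classes, num_classes, 3, stride=2, padding=1, output_padding=1)
        # 8x upsampling back to the input resolution: kernel 16, stride 8, padding 4
        self.up8 = nn.ConvTranspose2d(num_classes, num_classes, 16, stride=8, padding=4)

    def forward(self, pool3, pool4, pool5):
        x = self.up2_a(self.score_pool5(pool5))  # 1/32 -> 1/16 resolution
        x = x + self.score_pool4(pool4)          # skip connection from pool4
        x = self.up2_b(x)                        # 1/16 -> 1/8 resolution
        x = x + self.score_pool3(pool3)          # skip connection from pool3
        return self.up8(x)                       # 1/8 -> full resolution


num_classes = 21
decoder = FCN8Decoder(num_classes)
# Random stand-ins for the VGG16 feature maps of a 64x64 input image
pool3 = torch.randn(1, 256, 8, 8)
pool4 = torch.randn(1, 512, 4, 4)
pool5 = torch.randn(1, 512, 2, 2)
out = decoder(pool3, pool4, pool5)
print(out.shape)  # torch.Size([1, 21, 64, 64])
```

The "8" in FCN-8 refers to this last step: after fusing pool4 and pool3, the scores are at 1/8 of the input resolution and are upsampled by a factor of 8.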

FCN-8, Classification & Loss

The final step is to define a loss. That way, we can approach training an FCN just like we would approach training a normal classification CNN.

In the case of an FCN, the goal is to assign each pixel to the appropriate class. We already know a great loss function for this setup: cross-entropy loss!
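Written out, the per-pixel cross-entropy averaged over all pixels is given below. Here $z_{n,c,i,j}$ is the network's raw score (logit) for class $c$ at pixel $(i, j)$ of image $n$, $y_{nij}$ is that pixel's true class, $C$ is the number of classes, and $N$, $H$, $W$ are the batch size, height and width. This is the mean that PyTorch's `nn.CrossEntropyLoss` computes by default, assuming no class weights and no ignored labels.

$$
\mathcal{L} = -\frac{1}{N H W}\sum_{n=1}^{N}\sum_{i=1}^{H}\sum_{j=1}^{W} \log \frac{\exp\left(z_{n,\,y_{nij},\,i,\,j}\right)}{\sum_{c=1}^{C}\exp\left(z_{n,c,i,j}\right)}
$$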

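Remember that the output tensor is 4D, so before computing the loss we can reshape it to 2D, where each row represents a pixel and each column a class; the labels are flattened to one class index per pixel. From there, we can use standard cross-entropy loss. PyTorch's `nn.CrossEntropyLoss` also accepts the 4D `(batch_size, num_classes, height, width)` output directly, together with a `(batch_size, height, width)` target.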
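The reshape can be sketched as follows, with random tensors standing in for a real network output and label map (all sizes are hypothetical). The class axis has to be moved last with `permute` before flattening. Calling `reshape(-1, num_classes)` directly on the `(N, C, H, W)` tensor would mix values from different pixels and classes in each row.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
batch_size, num_classes, height, width = 2, 21, 8, 8  # hypothetical sizes

logits = torch.randn(batch_size, num_classes, height, width)         # network output (N, C, H, W)
labels = torch.randint(0, num_classes, (batch_size, height, width))  # one class index per pixel

# Reshape to 2D: move the class axis last, then flatten so each row is one pixel.
logits_2d = logits.permute(0, 2, 3, 1).reshape(-1, num_classes)      # (N*H*W, C)
labels_1d = labels.reshape(-1)                                       # (N*H*W,)
loss_2d = F.cross_entropy(logits_2d, labels_1d)

# nn.CrossEntropyLoss also accepts the 4D output directly with an (N, H, W) target.
loss_4d = nn.CrossEntropyLoss()(logits, labels)

print(logits_2d.shape)                 # torch.Size([128, 21])
print(loss_2d.item(), loss_4d.item())  # the two values agree
```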

That’s it! We now have an idea of how to create an end-to-end model for semantic segmentation. Check out the original paper to read more specifics about FCN-8.